This blog post shows how to back up important media files such as photos and videos reliably and cost-effectively with AWS S3 Glacier Deep Archive. Based on my own 3-2-1 backup strategy, you’ll learn how to store data locally on a NAS and an additional TrueNAS box, then compress and encrypt it before uploading to AWS. You’ll also get a shell script for automated uploads to S3 Glacier Deep Archive and essential tips for secure password and key management.

Why AWS S3 Glacier Deep Archive?

AWS S3 Glacier Deep Archive is an excellent solution for long-term offsite backups, especially for data you typically never need to access. AWS encrypts stored objects at rest and designs the service for 99.999999999% (11 nines) durability. Here are the main advantages:

- Physical separation: Data is stored far from your home, protecting it from local disasters.

- Redundancy & maintenance: AWS regularly checks and maintains the storage media.

- Cost-effective: In the eu-north-1 (Stockholm) region, storage costs just $0.00099 per GB per month, or approximately $1 per terabyte per month.

The only real downside: a standard restore takes up to 12 hours and costs around $0.02 per GB ($20 per terabyte) in retrieval fees, plus the usual data transfer charges for the download. But since this backup is meant for absolute emergencies only, that’s acceptable.
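To make the numbers above concrete, here is a small sketch that estimates storage and restore costs for a given archive size. It only uses the two prices quoted in this post, which are assumptions rather than live AWS pricing, and it ignores request and data transfer charges. The function name estimate_costs is made up for this example.

```shell
#!/bin/bash
# Rough cost estimate for S3 Glacier Deep Archive.
# ASSUMPTION: both prices are the figures quoted in this post, not live AWS pricing.
STORAGE_PRICE_PER_GB_MONTH=0.00099   # USD per GB per month
RETRIEVAL_PRICE_PER_GB=0.02          # USD per GB restored

# estimate_costs SIZE_GB
# Prints the monthly and yearly storage cost and the cost of one full restore.
estimate_costs() {
    awk -v gb="$1" -v s="$STORAGE_PRICE_PER_GB_MONTH" -v r="$RETRIEVAL_PRICE_PER_GB" 'BEGIN {
        printf "storage/month: $%.2f\n", gb * s
        printf "storage/year:  $%.2f\n", gb * s * 12
        printf "full restore:  $%.2f\n", gb * r
    }'
}
```

Calling estimate_costs 1000 for roughly 1 TB prints $0.99 per month, $11.88 per year, and $20.00 for one full restore.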

I specifically use eu-north-1 (Stockholm) because of its lower carbon footprint, thanks to a higher share of renewable energy.

The 3-2-1 Backup Strategy

I use the well-established 3-2-1 backup strategy to keep my media secure:

- 3 Copies: Original data on smartphones, backups on a Synology NAS, and additional backups on a TrueNAS box. Counting S3, I actually have four copies.

- 2 Different Media Types: The Synology NAS and TrueNAS box use hard drives, while S3 Glacier Deep Archive is commonly assumed to use magnetic tape (AWS doesn’t publish the underlying storage media).

- 1 Offsite Copy: Compressed and encrypted data are uploaded to AWS S3 Glacier Deep Archive every six months.

This structured approach increases data security. If local drives fail—say due to lightning strikes—you still have offsite backups or alternative storage media. If the Synology NAS experiences a fatal software error after an update, the TrueNAS backup remains unaffected.

My Backup Infrastructure in Detail

Local Backup with Synology NAS and TrueNAS

All family smartphones automatically back up media to my Synology NAS via Synology Photos. The NAS uses an SHR RAID setup (one of four drives can fail without data loss). Additionally, the NAS regularly stores backups from PCs and my homelab servers.

Once a month, relevant data, especially media, is copied to a TrueNAS Scale box at home. The box is connected to the network and power only for this purpose and otherwise kept offline. It is an older, less energy-efficient PC with several older hard drives, and ZFS further improves their reliability.

Offsite Backup with AWS S3 Glacier Deep Archive

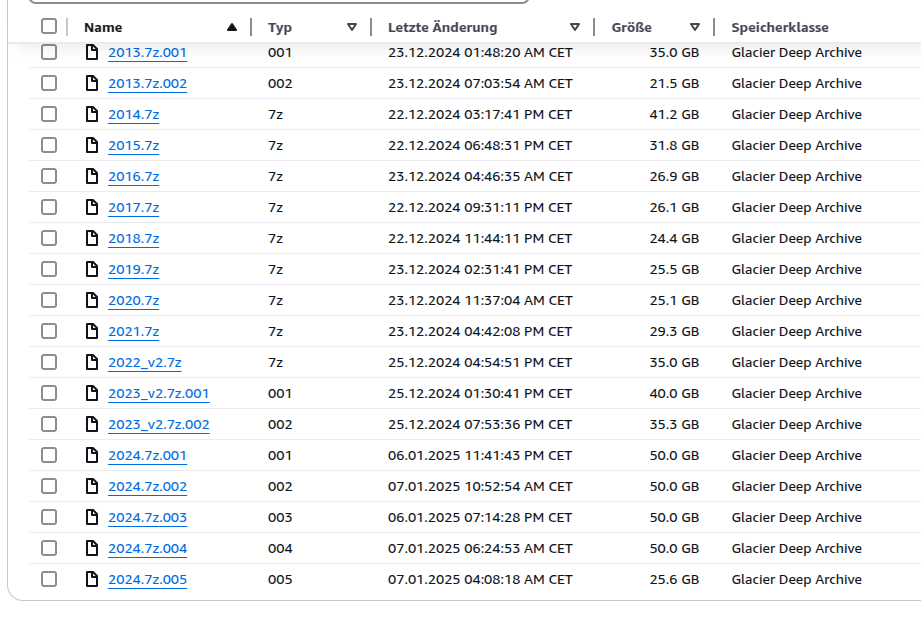

About every six months, I create a 7-Zip archive of my data, compressed and encrypted with AES-256, and upload it to my AWS S3 bucket. Currently, I store around 1 TB of photos and videos from more than 20 years of digital photography. Storage demands have increased significantly due to higher resolutions and especially video files from smartphones and action cameras.

As the screenshot shows, I split larger archives into volumes of 35 to 50 GB, though that’s not strictly necessary. If additional media files from a previous year turn up later, I upload a “v2” archive and delete the older files.
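Creating such an archive could look roughly like the sketch below. It assumes the 7z command-line tool is installed; the function name, the paths, and the 40g volume size are illustrative choices, not necessarily the exact settings I use.

```shell
#!/bin/bash
# Sketch: build an encrypted, split 7z archive.
# ASSUMPTIONS: `7z` is on PATH; paths and the default volume size are examples only.

# create_archive SOURCE_DIR ARCHIVE_PATH [VOLUME_SIZE]
# Set DRY_RUN=1 to print the 7z command instead of running it.
create_archive() {
    local source_dir=$1
    local archive_path=$2
    local volume_size=${3:-40g}

    # a         add files to an archive
    # -t7z      use the 7z format, which encrypts with AES-256 once a password is set
    # -mhe=on   also encrypt the archive headers, so file names stay hidden
    # -p        prompt for the password instead of passing it on the command line
    # -v<size>  split the archive into volumes of the given size
    set -- 7z a -t7z -mhe=on -p "-v${volume_size}" "$archive_path" "$source_dir"

    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}
```

For example, create_archive ./photos/2023 ./s3/photos-2023.7z 40g would write volumes named photos-2023.7z.001, photos-2023.7z.002, and so on into ./s3. Running 7z t on the first volume afterwards checks that the archive can be read back before it is uploaded.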

Installing and Configuring AWS CLI

To use the shell script, first install and configure the AWS CLI. Detailed instructions are available in the AWS documentation (Install AWS CLI).

Then configure the CLI using your AWS Access Key and Secret Access Key, which you can create via AWS IAM:

aws configure
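After running aws configure, it can help to confirm that the CLI really authenticates before starting a multi-hour upload. The following is a minimal sketch; the function name is made up, and the region is simply the one used in this post.

```shell
#!/bin/bash
# Sketch: set the default region and confirm that the AWS CLI can authenticate.
# ASSUMPTION: eu-north-1 is this post's region choice; use your own if it differs.
AWS_BACKUP_REGION="eu-north-1"

check_aws_setup() {
    # Write the default region into the CLI configuration
    # (the same configuration that `aws configure` edits).
    aws configure set region "$AWS_BACKUP_REGION" || return 1

    # Ask AWS which identity the stored keys belong to.
    # This call fails if the access key or secret key is wrong.
    if aws sts get-caller-identity --query Arn --output text; then
        echo "AWS CLI is configured for $AWS_BACKUP_REGION"
    else
        echo "AWS CLI cannot authenticate; re-run 'aws configure'" >&2
        return 1
    fi
}
```

If the keys are valid, check_aws_setup prints the ARN of the IAM user followed by a confirmation line.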

Shell Script for Uploads

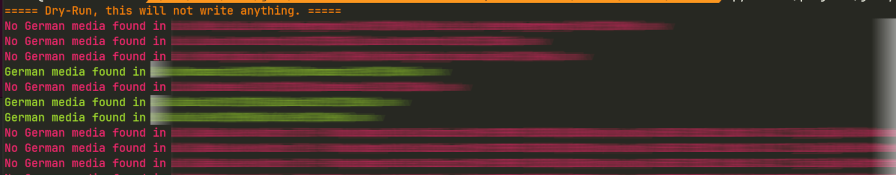

Here’s the simple shell script I use to automate archive uploads to AWS S3 Glacier Deep Archive:

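#!/bin/bash

# Specify the S3 bucket name
BUCKET_NAME="s3-backup-privat"

# Find all files matching the patterns *.7z or *.7z.* in the ./s3 directory.
# -print0 together with read -d '' keeps file names with spaces intact.
find ./s3 -maxdepth 1 -type f \( -name "*.7z" -o -name "*.7z.*" \) -print0 |
while IFS= read -r -d '' file; do
    file_basename=$(basename "$file")
    echo "Uploading: $file_basename"
    # Upload straight into the Deep Archive storage class.
    # < /dev/null keeps the aws command from reading the file list on stdin.
    aws s3 cp "$file" "s3://$BUCKET_NAME/$file_basename" --storage-class DEEP_ARCHIVE < /dev/null
done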

This script finds all 7z files in the ./s3 folder, including split volumes such as .7z.001, and uploads them to my “s3-backup-privat” bucket.

No lifecycle rule is needed to move the objects into Deep Archive, because the --storage-class DEEP_ARCHIVE option sets the storage class directly at upload. Upload via the Web UI is possible but less reliable for large files.
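To double-check that an upload really landed in Deep Archive, and to start a restore in an emergency, the AWS CLI offers the s3api head-object and s3api restore-object commands. The sketch below wraps both; the function names and the object key in the usage note are hypothetical, and the 7-day default is just an example.

```shell
#!/bin/bash
# Sketch: check an object's storage class and request a restore.
# ASSUMPTIONS: the bucket name is the one from the upload script;
# object keys, the 7-day default, and the function names are examples.
BUCKET_NAME="s3-backup-privat"

# show_storage_class KEY
# Prints the storage class of one object; DEEP_ARCHIVE is expected here.
show_storage_class() {
    aws s3api head-object --bucket "$BUCKET_NAME" --key "$1" \
        --query StorageClass --output text
}

# request_restore KEY [DAYS] [TIER]
# Asks S3 to make a temporary copy available for download.
# DAYS is how long that copy is kept; TIER is Standard or Bulk.
request_restore() {
    local key=$1
    local days=${2:-7}
    local tier=${3:-Standard}
    aws s3api restore-object --bucket "$BUCKET_NAME" --key "$key" \
        --restore-request "{\"Days\":${days},\"GlacierJobParameters\":{\"Tier\":\"${tier}\"}}"
}
```

For instance, request_restore photos-2023.7z.001 7 Bulk asks for the cheaper but slower Bulk tier. Once the restore has finished, the file can be downloaded with a normal aws s3 cp. Each volume of a split archive needs its own restore request.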

Security: Password and Key Management

Secure management of your passwords, AWS access keys, and encryption keys should always be part of your backup strategy. Ensure this information is stored separately and securely so it’s available even in catastrophic situations, such as hardware loss.

I use KeePass and synchronize the database file between all my devices, with an additional copy synchronized to a Hetzner StorageShare instance. However, this still leaves an open issue: if my PC, notebook, smartphone, and tablet were all lost simultaneously (fire, theft), I wouldn’t have immediate access to my KeePass database.

My 7zip encryption password is a random, very long character string generated and stored securely by KeePass. This ensures that, even if someone managed to access the S3 files, they couldn’t easily decrypt the archives. AES-256 encryption used by 7zip should be sufficiently strong.

Cost Management in AWS

AWS Budgets lets you set budget alerts so that costs don’t come as a surprise. I strongly recommend setting one up, because unexpected costs can arise, especially when retrieving data.
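Budget alerts can be created in the AWS console, but the CLI works as well. The following sketch uses aws budgets create-budget to set up a monthly cost budget with an e-mail alert. The budget name, the 80% threshold, and the function name are assumptions for illustration; account ID, limit, and address are placeholders you pass in.

```shell
#!/bin/bash
# Sketch: monthly cost budget with an e-mail alert at 80% of the limit.
# ASSUMPTIONS: budget name and threshold are examples; all arguments are placeholders.

# create_backup_budget ACCOUNT_ID LIMIT_USD EMAIL
create_backup_budget() {
    local account_id=$1
    local limit_usd=$2
    local email=$3
    local budget_json notifications_json

    # The budget itself: a fixed monthly cost limit in USD.
    budget_json=$(cat <<EOF
{
  "BudgetName": "s3-backup-budget",
  "BudgetLimit": { "Amount": "${limit_usd}", "Unit": "USD" },
  "TimeUnit": "MONTHLY",
  "BudgetType": "COST"
}
EOF
)

    # One notification: send an e-mail once actual costs exceed 80% of the limit.
    notifications_json=$(cat <<EOF
[
  {
    "Notification": {
      "NotificationType": "ACTUAL",
      "ComparisonOperator": "GREATER_THAN",
      "Threshold": 80,
      "ThresholdType": "PERCENTAGE"
    },
    "Subscribers": [
      { "SubscriptionType": "EMAIL", "Address": "${email}" }
    ]
  }
]
EOF
)

    aws budgets create-budget \
        --account-id "$account_id" \
        --budget "$budget_json" \
        --notifications-with-subscribers "$notifications_json"
}
```

A call such as create_backup_budget 111122223333 5 you@example.com would set a 5 USD monthly budget. With about 1 TB in Deep Archive, a limit of a few dollars leaves headroom for normal months but still flags an accidental large retrieval.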

Alternatives to Cloud Backups

If cloud storage isn’t an option for you, you can store your archives on external hard drives or other media instead and keep them with friends or family, or in a bank’s safe deposit box. This also provides physical separation and protects your data from disasters. However, refreshing these backups requires more effort: backing up onto a second drive, visiting your external location, swapping drives, bringing the old drive home, checking the data, and updating backups again.

Conclusion

AWS S3 Glacier Deep Archive is an excellent, cost-effective solution for reliable offsite backups of your most important data. Combined with a robust local backup strategy, it provides strong protection for your valuable memories and data.